Originally published on Medium.com, May 14, 2025

In this four-part series laying out a human-centered blueprint for ethical AI, I began by exploring a question at the heart of emerging AI technologies: What does it mean to keep humanity at the center of systems designed to outpace us? Along the way, I explored how reframing UX as human-centered intelligence can help organizations challenge and move beyond AI hype and the assumption of inevitability, embed benevolence into design, and practice intentional, ethical stewardship of intelligent systems.

In the first article, I introduced the concept of human-centered intelligence as an alternative to a reality in which the rush to implement AI pushes us forward, with potentially negative impacts on the very people it is meant to serve.

What does it mean to keep humanity at the center of systems designed to outpace us?

This question, posed by Tristan Harris in his April 2025 TED talk, has occupied my thoughts ever since. Keeping humanity at the center of advancing technology isn't simply about creating better interfaces, reducing friction in workflows, or increasing conversions. It's about ensuring that AI doesn't cause us to abandon our values or forget the people these systems are meant to serve. Put differently, we need to stay focused on, and preserve, the full human benefit of the exciting potential these technologies offer.

While my background is rooted in user experience (UX), I have also worked in product design and innovation. In addition, I'm a member of the PurposeFully Human community, a collective of professionals committed to reimagining how technology, design, and organizational practices can serve human flourishing.

This combined perspective lets me say clearly that what's needed now goes beyond the traditional boundaries of design. We need a new kind of intelligence embedded into AI development, something I am choosing to call human-centered intelligence, that can guide organizations toward ethical, grounded, and compassionate innovation. Without it, we risk creating technologies that scale harm as quickly as they scale solutions.

AI Hype and the Misalignment of Intent

It's difficult to open an email or article without seeing a mention of AI. Although I completed Google's Startup School for GenAI and regularly use tools like ChatGPT and Gemini, I still approach AI cautiously. I have definitely seen it enhance my own work. More broadly, I've seen firsthand how AI, when thoughtfully applied, can rapidly create sustainable positive impact at scale. In one case, it produced a measurable benefit for the environment by changing internal business processes. This is a great example of what AI can deliver when it is aligned with the public good.

Even so, I remain cautious. These moments of benefit are still the exception rather than the norm, and typical experiences are surrounded by hype, shallow use cases, and a rush to scale. Many of the use cases I read and learn about leave me pondering deeper questions: Who is this really serving? How responsibly? Why was it introduced at all?

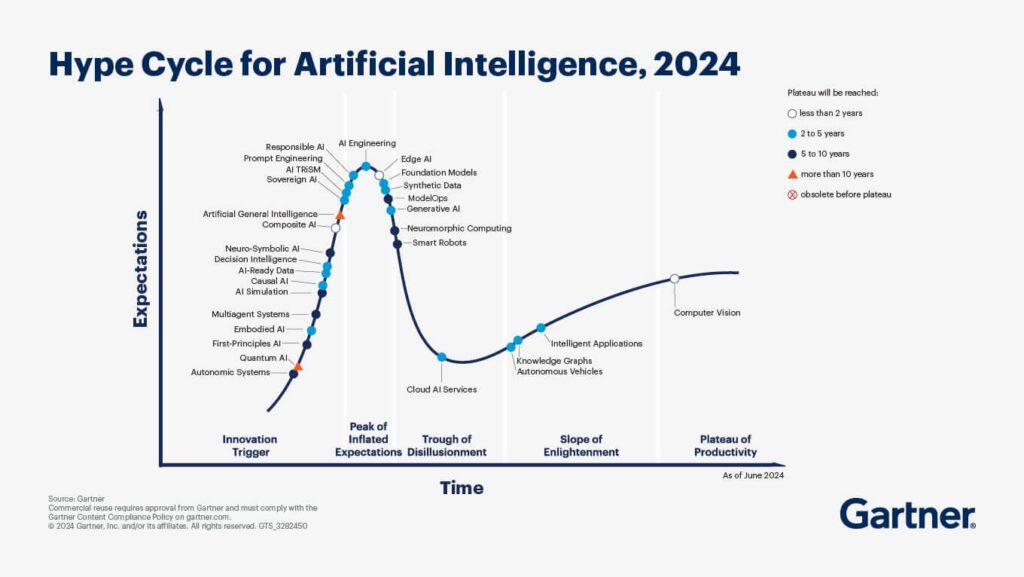

According to the Gartner Hype Cycle for AI report released late in 2024, much of GenAI has passed the Peak of Inflated Expectations and is approaching the Trough of Disillusionment. This suggests that organizations may have overestimated AI's promised value, misunderstood the core problems they wanted AI to solve, or underestimated the complexity of implementing AI appropriately. In this environment, too many AI applications are solutions in search of problems, created to own the narrative rather than to improve human experience by solving big problems.

The opinions expressed above are my own and do not reflect the views of my employer or any affiliated organizations.